On hiring

Creating scalable, consistent and noise-free interview evaluations.

For me, interviews are less about the answer and more about the process. I’m less interested in whether a candidate knows a given technology or has had a certain experience. I want to see how a candidate thinks and how they approach a problem. I want to see how they react when they see something new: do they use it as a learning opportunity? Do they apply it later in the interview?

In this article, I want to discuss hiring processes. I come from a software engineering background, have interviewed hundreds if not thousands of software engineers, and have likely hired over a hundred. I’ve also been involved in hiring processes and interviews for product managers, product designers and program managers, as well as people managers and senior leadership. The article leans towards hiring software engineers, but I believe it is generally applicable.

Bias and noise

There has been a lot of focus on bias over the years, and Daniel Kahneman, with co-authors Olivier Sibony and Cass Sunstein, recently introduced us to the concept of noise in their book of the same name. This article doesn’t go into much detail about removing bias, which might lead to actions such as anonymising applications. Instead, it looks at the interview and decision-making processes, and how to reduce noise.

Think of a target. The bottom left of this illustration shows no bias or noise: all shots land right in the centre. A tight cluster away from the centre visualises bias without noise; every shot is pulled in the same direction. Shots scattered with no real pattern show noise, which can occur with bias (see top right) or without it (top left).
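To make the target analogy concrete, here is a minimal Python sketch in one dimension, assuming each judgement is a single score and that the true score is known (rarely the case in hiring, so treat it as illustrative only). It measures bias as the average error and noise as the standard deviation of the judgements, then checks the error equation from Noise: mean squared error = bias² + noise². The function name and the ratings are hypothetical.

```python
from statistics import fmean, pstdev


def error_components(judgements: list[float], true_value: float) -> dict[str, float]:
    """Split the error of a set of judgements into bias and noise.

    Bias is the average error; noise is the population standard deviation
    of the judgements around their own mean.
    """
    errors = [j - true_value for j in judgements]
    bias = fmean(errors)
    noise = pstdev(judgements)
    mse = fmean(e * e for e in errors)
    return {"bias": bias, "noise": noise, "mse": mse}


# Hypothetical ratings of one candidate whose "true" score is 3.
biased_tight = error_components([4.0, 4.0, 4.1, 3.9], true_value=3.0)
unbiased_noisy = error_components([1.0, 5.0, 2.0, 4.0], true_value=3.0)

for name, c in [("biased, low noise", biased_tight), ("unbiased, noisy", unbiased_noisy)]:
    # With population variance, the error equation holds exactly.
    assert abs(c["mse"] - (c["bias"] ** 2 + c["noise"] ** 2)) < 1e-9
    print(f"{name}: bias={c['bias']:.2f} noise={c['noise']:.2f} mse={c['mse']:.2f}")
```

Both sets of ratings are wrong on average, but for different reasons: the first is a tight off-centre cluster, the second is centred but scattered.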

Kahneman, Sibony and Sunstein describe three types of noise: level, pattern and occasion.

Level noise is the difference in the average level of judgements between people, and it shows up in rating scales, say where we are asked for a 1-5 rating. One person might give 5/5 because the expectation was met, whereas another might give 5/5 only when the expectation was exceeded. Without a definition of what each score means, a rubric, there will be noise.

Pattern noise is where two individuals make differing judgements on the same input, beyond any difference in how harsh or lenient they are overall. This might be medical professionals making different diagnoses and treatment plans for the same patient, judges issuing different sentences for the same case, or interviewers favouring different ways of solving a problem.

Occasion noise is where the same person makes a different decision on the same input depending on the situation, such as before or after eating, or when rushed versus when taking their time.
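A noise audit has several people judge the same cases and measures how much they disagree. Below is a minimal Python sketch, assuming a hypothetical, fully crossed setup where every interviewer has scored every candidate on the same scale. It uses the decomposition system noise² = level noise² + pattern noise²: level noise is the spread of each interviewer's average score, and pattern noise is the interviewer-by-candidate residual that remains. Occasion noise cannot be separated out without the same interviewer scoring the same candidate more than once, so here it ends up inside the pattern term. The function name and data are illustrative, and the function returns variances (squared noise).

```python
from statistics import fmean


def noise_audit(scores: dict[str, dict[str, float]]) -> dict[str, float]:
    """Decompose system noise in a fully crossed interviewer x candidate grid.

    scores[interviewer][candidate] is a rating; every interviewer must have
    rated every candidate. Returns squared noise components (variances).
    """
    interviewers = list(scores)
    candidates = list(scores[interviewers[0]])
    grand = fmean(scores[i][c] for i in interviewers for c in candidates)
    rater_mean = {i: fmean(scores[i][c] for c in candidates) for i in interviewers}
    cand_mean = {c: fmean(scores[i][c] for i in interviewers) for c in candidates}

    # System noise^2: variance across interviewers for each candidate, averaged.
    system = fmean(
        fmean((scores[i][c] - cand_mean[c]) ** 2 for i in interviewers)
        for c in candidates
    )
    # Level noise^2: how far each interviewer's average sits from the overall average.
    level = fmean((rater_mean[i] - grand) ** 2 for i in interviewers)
    # Pattern noise^2: what is left once interviewer and candidate averages are removed.
    pattern = fmean(
        (scores[i][c] - rater_mean[i] - cand_mean[c] + grand) ** 2
        for i in interviewers
        for c in candidates
    )
    return {"system": system, "level": level, "pattern": pattern}


# Hypothetical 1-5 ratings: "bo" is harsher across the board (level noise),
# while "cy" rates candidate "x" unusually highly (pattern noise).
audit = noise_audit({
    "al": {"x": 3, "y": 4, "z": 2},
    "bo": {"x": 2, "y": 3, "z": 1},
    "cy": {"x": 5, "y": 4, "z": 2},
})
assert abs(audit["system"] - (audit["level"] + audit["pattern"])) < 1e-9
print(audit)
```

A rubric mainly targets the level term; structured questions and moving the decision to a panel mainly target the pattern term.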

Create a level playing field

Initial impressions can unduly influence decisions. Have you been in interviews that open with an informal chat? That chat can lead to different questions being asked, and if that happens, we can no longer fully compare two candidates. If the conversation creates a subconscious leaning towards the candidate, the halo effect has come into play: the initial impression, positive or negative, amplifies a later judgement (“that candidate is really good/bad”) or compensates for weaker areas later on (“the answer wasn’t great, but I’m prepared to overlook it”).

Humans are pattern-matching machines, and we look for coherence; we like to make sense of things. We can be guilty of seeing patterns in meaningless data and assigning meaning where there is none. All of this can increase subjectivity in the final decision to hire.

We want to compare candidates on a level playing field. To do this, we need to use the same process and the same questions for all candidates within a batch. We might run a batch, make changes and then run another batch, but changing the process within a batch will cause problems, because candidates in that batch are no longer being assessed on the same terms.

What I’m outlining here is mostly about scaling and consistency of process. If you can create a process with a good job description (not a compromised wish list trying to please everyone), a consistent interview and well-defined scorecards, training new interviewers becomes easier. Some interviewers say they prefer to ask their own questions, but when you rely on individual interviewers, you may come to depend on the same people over and over, and you could be subject to the Dunning-Kruger effect, whereby those with less skill or experience overestimate their ability.

The interview

When constructing the interview, we want to give evaluators predefined questions, so that candidates can later be compared, along with examples of great, good and average responses to those questions. This reduces level noise across evaluators.

We also want to consider a “work sample task”: something representative of the work that would be undertaken as part of the job. These tasks are often the best predictor of success in the role. Some roles and levels are easier than others to create these exercises for.

For software engineers, one example of a task-based interview is the take-home test. A candidate is given an exercise and asked to complete it within a given amount of time before submitting the source code for evaluation. The evaluator can never be sure how long the candidate actually took. Did they do it in a rush? Did they work on it full-time for a while, or outsource it to a generative AI tool? And what if they solved the problem, but in a way that was unfamiliar to, or disliked by, the evaluator?

It might take longer, but I prefer collaborative, pair-programming interviews, combined with an approach that guides each candidate down a path to the same output, or near enough. This helps to reduce the pattern noise of an evaluator preferring one approach over another.

Scorecards

In an effort to reduce level noise, we aim to give evaluators a structured scorecard with a detailed explanation of what it means to achieve each score. In this example, radio buttons are used so that the evaluator can select one, and only one, answer.

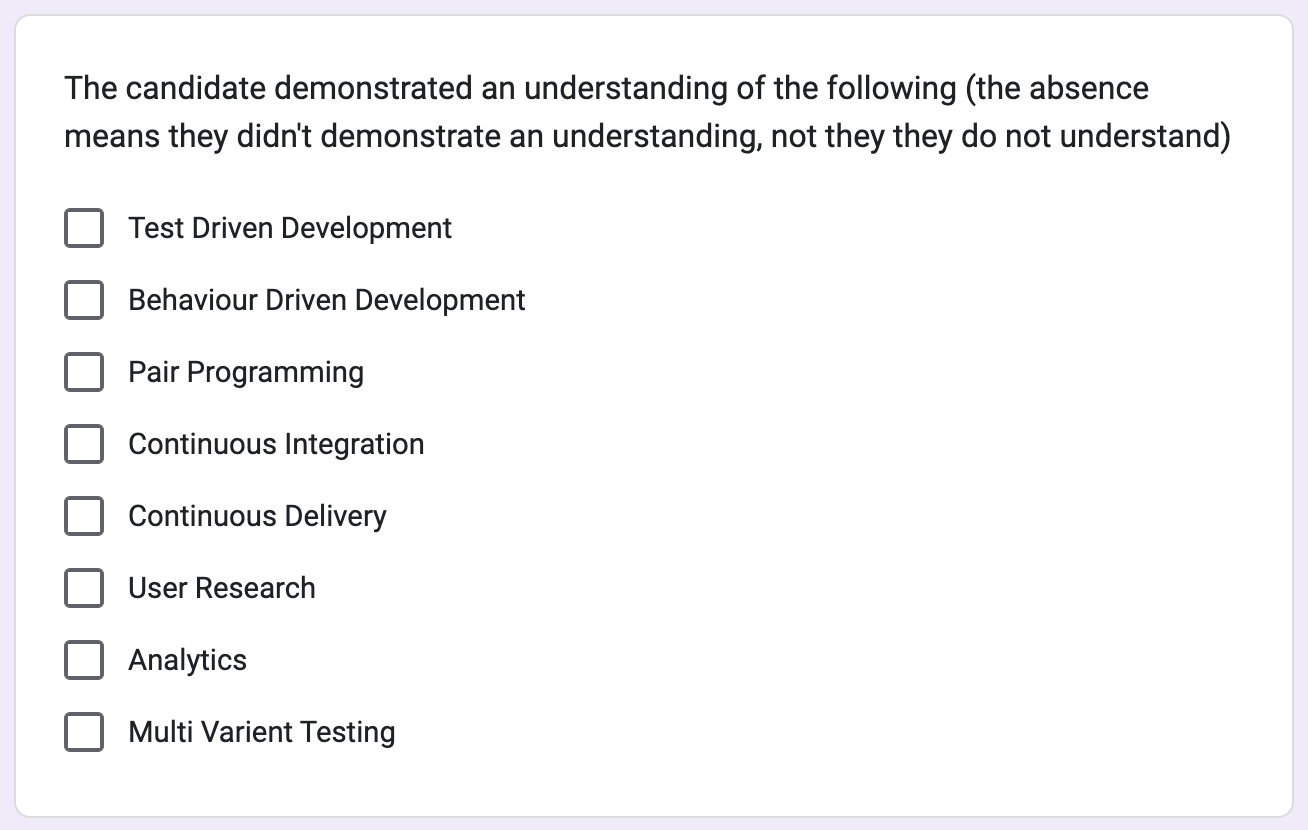

In this example, the evaluator can instead “check all that apply”. This loses the nuance and fidelity of radio buttons, but can be useful when a single answer might show several independent qualities.

A variation on this is a grid format, again with an explanation of what each option means.
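The scorecard formats differ mainly in how many options an evaluator may select. Here is a minimal Python sketch, with hypothetical names (`Kind`, `Question`, `validate`) rather than any real scorecard tool's API: each option carries a written definition, single-choice questions (the radio-button style) must have exactly one selection, and check-all-that-apply questions accept any subset. There is deliberately no hire/no-hire field, since the evaluator records observations rather than the decision.

```python
from dataclasses import dataclass
from enum import Enum


class Kind(Enum):
    SINGLE = "single"      # radio buttons: exactly one option may be chosen
    MULTIPLE = "multiple"  # "check all that apply": any subset, including none


@dataclass
class Question:
    prompt: str
    kind: Kind
    # Maps each option label to its written definition, so every evaluator
    # reads the same meaning into the same score.
    options: dict[str, str]


def validate(question: Question, selected: set[str]) -> None:
    """Raise ValueError if a response doesn't fit the question's format."""
    unknown = selected - set(question.options)
    if unknown:
        raise ValueError(f"unknown options: {sorted(unknown)}")
    if question.kind is Kind.SINGLE and len(selected) != 1:
        raise ValueError("select exactly one option")


# Hypothetical questions; the wording of each definition is illustrative only.
testing = Question(
    prompt="How did the candidate approach testing?",
    kind=Kind.SINGLE,
    options={
        "1": "Did not write or discuss tests.",
        "2": "Tested the happy path when prompted.",
        "3": "Tested edge cases unprompted and explained the choices.",
    },
)
signals = Question(
    prompt="Which of these did you observe?",
    kind=Kind.MULTIPLE,
    options={
        "clarify": "Asked clarifying questions before coding.",
        "feedback": "Incorporated a hint later in the session.",
    },
)

validate(testing, {"3"})                    # accepted: exactly one option
validate(signals, {"clarify", "feedback"})  # accepted: several options
```

Under the same assumptions, one way to model a grid is as several single-choice questions that share one set of option definitions.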

The benefit of such a structured scorecard is that less interviewer training is needed: the scorecard guides the evaluator through the process. The downside is that if any deviation is needed (although it is generally not desired), the evaluator may find it difficult to improvise.

By using such scorecards, we can reduce level noise through well-defined measurements for each area of evaluation. We can tackle pattern noise by moving the actual hiring decision away from the evaluator and up to the hiring manager or hiring panel.

The decision

The completed scorecards then go into a decision-making meeting that uses the decision hygiene techniques I’ve discussed before. I’ve assumed here that there is a panel, which may include the evaluators, managers, the recruiting team and the hiring manager.

For each candidate:

Each member of the panel reviews the candidate’s scorecards, either before the meeting or during silent reading time.

Each member thinks about their vote (yes or no to hire).

Each member writes their decision down, on paper or digitally (you may instead be using “Roman voting”: thumbs up or thumbs down).

Everyone then reveals their decision together, whether by showing thumbs up or down, holding up a card or piece of paper with the vote, or having a facilitator show the anonymous digital submissions.

If the vote is unanimous, proceed with that decision.

Otherwise, discuss the differences in voting and repeat the vote until there is consensus on a decision.
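The voting steps above form a simple loop. Below is a minimal Python sketch; `decide`, `collect_votes` and `discuss` are hypothetical names, with the two callbacks standing in for the human parts (writing votes down and revealing them together, then talking through the differences). The `max_rounds` cap is an assumption that is not part of the process described: it only stops the sketch from looping forever, and a `None` result leaves the next step to the panel.

```python
from collections.abc import Callable
from typing import Optional

Votes = dict[str, bool]  # panel member -> True (hire) or False (no hire)


def decide(
    collect_votes: Callable[[int], Votes],
    discuss: Callable[[Votes], None],
    max_rounds: int = 3,
) -> Optional[bool]:
    """Repeat the independent vote for one candidate until it is unanimous.

    collect_votes(round_no) should return every member's vote, written down
    before any are revealed. Returns the unanimous decision, or None if the
    panel has not converged after max_rounds.
    """
    for round_no in range(1, max_rounds + 1):
        votes = collect_votes(round_no)
        if len(set(votes.values())) == 1:  # everyone voted the same way
            return next(iter(votes.values()))
        discuss(votes)  # talk through the differences, then vote again
    return None


# Hypothetical scripted rounds: split at first, unanimous after discussion.
rounds = {
    1: {"ana": True, "ben": False, "cho": True},
    2: {"ana": True, "ben": True, "cho": True},
}
print(decide(lambda n: rounds[n], discuss=lambda votes: None))  # True
```

Collecting every vote before revealing any keeps each judgement independent, so early voices cannot anchor the rest of the panel.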

Wrap-up

It wasn’t that long ago that there were more jobs than candidates, and people talked about “the war for talent”. Given the great downsizing in the tech industry over the last 6 months, I think it’s safe to say the war is over, though I’m not convinced there was a winner. As the industry moves towards efficiency over growth, it’s a great time to review the hiring process, ideally before you actually need to hire: conduct a noise audit, review decision hygiene, and build more objective and repeatable hiring processes.

Further reading

Noise: A Flaw in Human Judgment by Daniel Kahneman, Olivier Sibony and Cass Sunstein

Work Rules! by Laszlo Bock

Karat’s write-up on building structured scorecards